AI researchers from Stanford and the University of Washington have developed a new reasoning model called s1, which was trained for under $50 using cloud compute credits, as detailed in a research paper released on January 30, 2025.

One notable technique: telling s1 to 'wait' during its reasoning led the model to double-check its work and think longer, which improved the accuracy of its answers.
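The 'wait' trick is applied while the model generates its reasoning, not during training. The sketch below is a minimal illustration of the idea under stated assumptions. It uses a hypothetical one-token-at-a-time `next_token` function, a hypothetical `</think>` end-of-reasoning delimiter, and a scripted `toy_model`; none of these are s1's actual code. When the model tries to stop reasoning before a minimum budget, the delimiter is replaced with "Wait" so it keeps going. A maximum budget cuts reasoning off regardless.

```python
from typing import Callable, List

# Hypothetical delimiter marking the end of the reasoning segment.
END_THINK = "</think>"
WAIT = "Wait"


def budget_forced_thinking(
    next_token: Callable[[List[str]], str],
    prompt: List[str],
    min_tokens: int,
    max_tokens: int,
) -> List[str]:
    """Decode reasoning tokens under a minimum and maximum thinking budget."""
    context = list(prompt)
    thinking: List[str] = []
    while len(thinking) < max_tokens:
        token = next_token(context)
        if token == END_THINK:
            if len(thinking) >= min_tokens:
                break
            # The model tried to stop early: drop the delimiter and append "Wait".
            token = WAIT
        thinking.append(token)
        context.append(token)
    # Close the reasoning segment, forcing it shut if the max budget was reached.
    return thinking + [END_THINK]


def toy_model(context: List[str]) -> str:
    """Scripted stand-in for a language model (hypothetical, for illustration)."""
    script = {"Q": "a", "a": "b", "b": END_THINK, WAIT: "c", "c": END_THINK}
    return script[context[-1]]


print(budget_forced_thinking(toy_model, ["Q"], min_tokens=4, max_tokens=10))
```

With `min_tokens=0` the toy model stops after `a b`. With a small `max_tokens`, the loop ends the reasoning even if the model would have continued.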

The result shows how cheaply a capable AI model can now be built, compared with earlier approaches that cost far more.

This commoditization of AI models raises questions about how large AI labs can sustain their lead when small teams can closely replicate complex models at minimal cost.

s1 was trained on a curated dataset of 1,000 questions and answers. Training took less than 30 minutes on 16 Nvidia H100 GPUs, which works out to roughly $20 of compute.
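A quick back-of-envelope check of those figures follows. Sixteen GPUs for at most half an hour is at most 8 GPU-hours. A roughly $20 bill therefore implies about $2.50 or more per H100-hour. That per-hour rate is derived here for illustration. It is not a price quoted by the researchers, and the helper names are hypothetical.

```python
def gpu_hours(num_gpus: int, minutes: float) -> float:
    """GPU-hours consumed by running num_gpus GPUs for the given number of minutes."""
    return num_gpus * minutes / 60


def implied_hourly_rate(total_cost: float, hours: float) -> float:
    """Price per GPU-hour implied by a total compute bill."""
    return total_cost / hours


# Figures from the article: 16 H100s, under 30 minutes, roughly $20.
hours = gpu_hours(16, 30)  # upper bound, since training took less than 30 minutes
rate = implied_hourly_rate(20, hours)  # so this is a lower bound on the hourly rate
print(f"{hours} GPU-hours, about ${rate:.2f} per H100-hour")
```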

To build s1, the researchers started from an off-the-shelf base model and fine-tuned it through distillation, a process that extracts the reasoning capabilities of another AI model by training on that model's answers.

The research emphasizes that reasoning models can be effectively distilled from small datasets using supervised fine-tuning (SFT), offering a more economical alternative to large-scale reinforcement learning methods.
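Distillation by SFT boils down to two steps. First, collect a teacher model's reasoning traces and answers. Second, fine-tune the student to reproduce them with an ordinary next-token cross-entropy loss. Below is a minimal sketch of the data-preparation step. It assumes a toy whitespace tokenizer, a hypothetical `</think>` separator between reasoning and answer, and the common convention of labeling prompt tokens -100 so the loss ignores them. It is illustrative only, not the s1 training code.

```python
from typing import Dict, List

IGNORE_INDEX = -100  # PyTorch's CrossEntropyLoss skips this label by default


def build_sft_example(
    question: str,
    teacher_reasoning: str,
    teacher_answer: str,
    vocab: Dict[str, int],
) -> Dict[str, List[int]]:
    """Turn one teacher response into (input_ids, labels) for supervised fine-tuning.

    Prompt tokens are masked out of the labels, so the student is trained only
    to reproduce the teacher's reasoning and final answer.
    """
    prompt_tokens = question.split()
    target_tokens = teacher_reasoning.split() + ["</think>"] + teacher_answer.split()
    for tok in prompt_tokens + target_tokens:
        vocab.setdefault(tok, len(vocab))  # toy tokenizer: assign ids on first sight
    prompt_ids = [vocab[t] for t in prompt_tokens]
    target_ids = [vocab[t] for t in target_tokens]
    return {
        "input_ids": prompt_ids + target_ids,
        "labels": [IGNORE_INDEX] * len(prompt_ids) + target_ids,
    }


vocab: Dict[str, int] = {}
example = build_sft_example("What is 2+3?", "2 plus 3 is 5", "5", vocab)
print(example)
```

A real pipeline would use the student model's own tokenizer and chat template. The masking idea stays the same.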

On tests of math and coding ability, s1 performs similarly to cutting-edge reasoning models such as OpenAI's o1 and DeepSeek's R1. The model, its training data, and its code are publicly available on GitHub.

Cheap distillation has not ended the spending race: Meta, Google, and Microsoft are expected to invest hundreds of billions of dollars in AI infrastructure in 2025, a sign that pushing AI innovation forward may still require substantial funding.

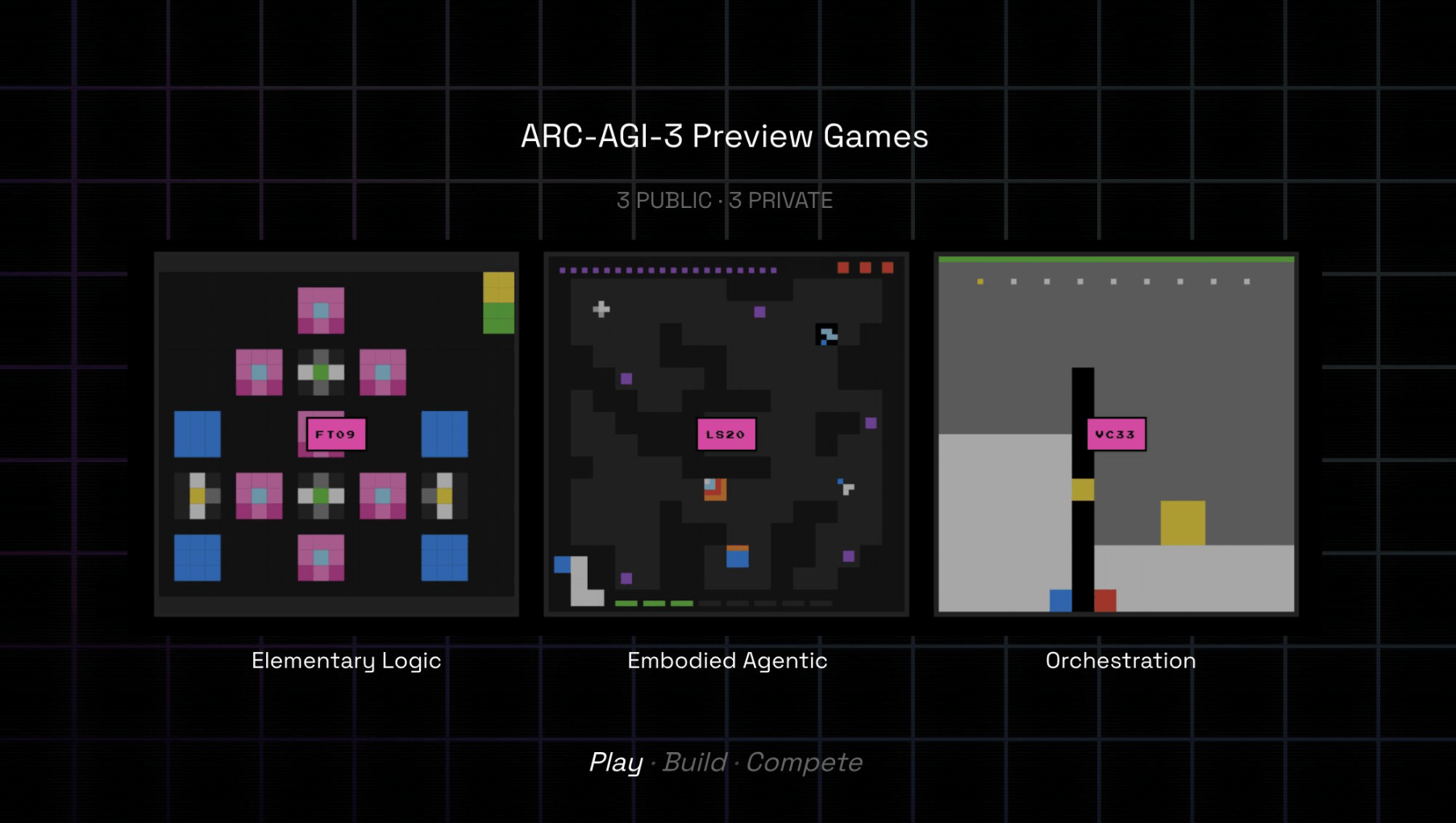

The team set out to find the simplest way to achieve strong reasoning performance and 'test-time scaling', which means letting an AI model spend more computation thinking before it answers a question.

s1 was distilled from Google's Gemini 2.0 Flash Thinking Experimental model. Google offers free access to that model, but its terms prohibit reverse-engineering it to build competing services. Google has been asked for comment.