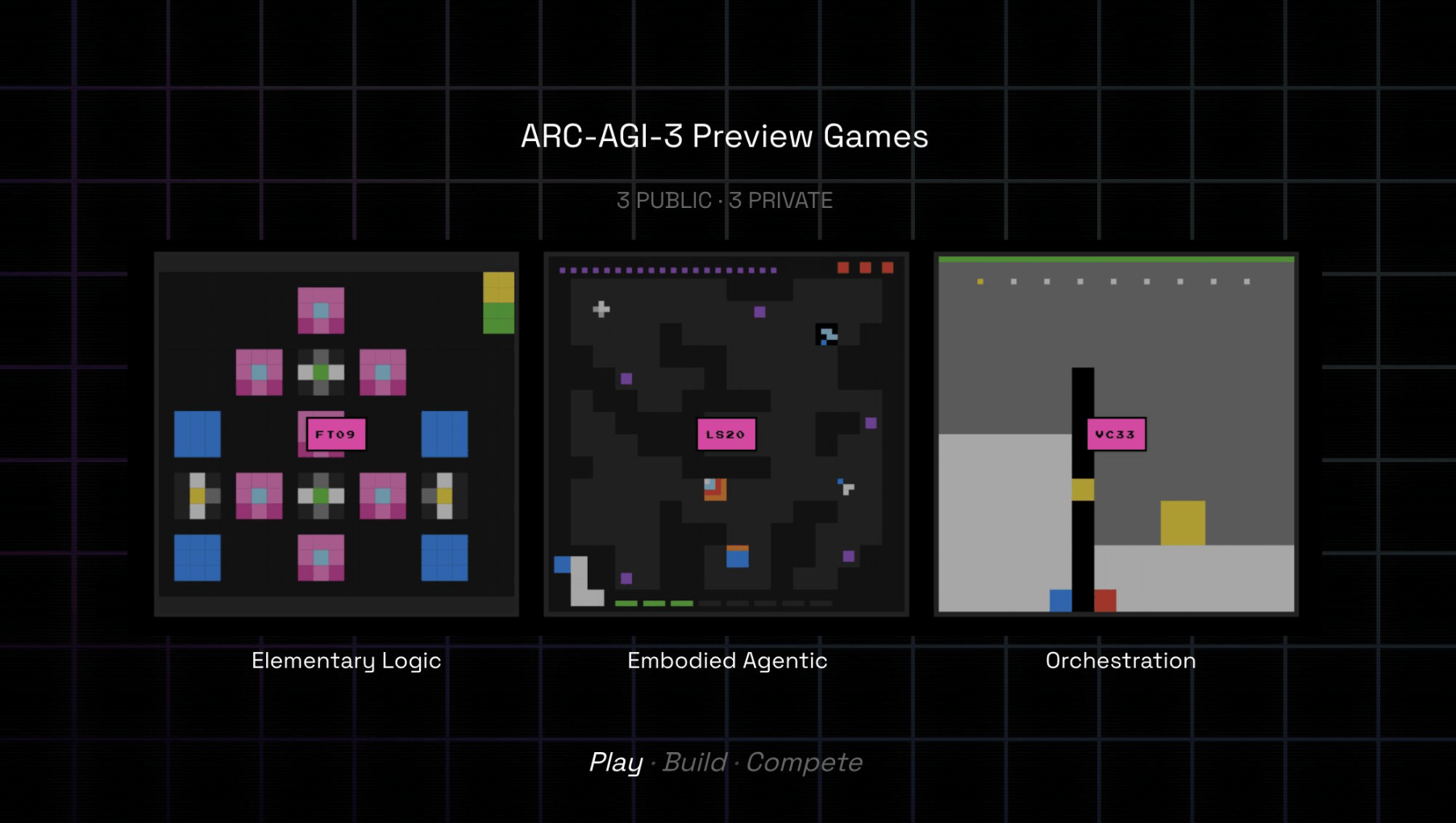

The recent launch of DeepSeek-R1 models by DeepSeek AI represents a significant advancement in generative AI, showcasing strong reasoning, coding, and natural language understanding capabilities.

These DeepSeek-R1 models are accessible through the Amazon Bedrock Marketplace and Amazon SageMaker JumpStart, with distilled variants available via Amazon Bedrock Custom Model Import.

To enhance the security of generative AI applications built on these models, Amazon Bedrock Guardrails can be integrated with them, applying safeguards that align with an organization's responsible AI policies.

The guardrails provide configurable safeguards, including content filtering and sensitive information protection, that help keep the application environment secure.

To prevent misuse of these models, it is essential to strengthen security measures in line with guidance such as the OWASP Top 10 for LLM Applications and MITRE ATLAS.

The guardrail policies encompass various filters, such as content, topic, word, and sensitive information filters, which help maintain content safety and user privacy.
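As a rough illustration of how these four filter types might fit together, the sketch below builds the keyword arguments for the boto3 `create_guardrail` call on the `bedrock` client. The guardrail name, the denied topic, the custom word, the blocked messages, and the region are all hypothetical placeholders, not values from the original post; the helper names `build_guardrail_config` and `create_guardrail` are likewise made up for this example.

```python
def build_guardrail_config(name: str) -> dict:
    """Return keyword arguments for the Bedrock CreateGuardrail call.

    All policy values below are illustrative assumptions.
    """
    return {
        "name": name,
        "description": "Illustrative guardrail for a DeepSeek-R1 application",
        # Content filters: harmful categories plus prompt-attack detection.
        # PROMPT_ATTACK applies to inputs only, so its output strength is NONE.
        "contentPolicyConfig": {
            "filtersConfig": [
                {"type": "HATE", "inputStrength": "HIGH", "outputStrength": "HIGH"},
                {"type": "VIOLENCE", "inputStrength": "HIGH", "outputStrength": "HIGH"},
                {"type": "PROMPT_ATTACK", "inputStrength": "HIGH", "outputStrength": "NONE"},
            ]
        },
        # Topic filter: a hypothetical denied topic.
        "topicPolicyConfig": {
            "topicsConfig": [
                {
                    "name": "InvestmentAdvice",
                    "definition": "Personalized recommendations about buying or selling financial products.",
                    "type": "DENY",
                }
            ]
        },
        # Word filter: a hypothetical custom term plus the managed profanity list.
        "wordPolicyConfig": {
            "wordsConfig": [{"text": "internal-project-codename"}],
            "managedWordListsConfig": [{"type": "PROFANITY"}],
        },
        # Sensitive information filter: mask emails, block card numbers.
        "sensitiveInformationPolicyConfig": {
            "piiEntitiesConfig": [
                {"type": "EMAIL", "action": "ANONYMIZE"},
                {"type": "CREDIT_DEBIT_CARD_NUMBER", "action": "BLOCK"},
            ]
        },
        "blockedInputMessaging": "Sorry, this request cannot be processed.",
        "blockedOutputsMessaging": "Sorry, the response was blocked by policy.",
    }


def create_guardrail(name: str, region: str = "us-east-1") -> str:
    """Create the guardrail and return its ID (requires AWS credentials)."""
    import boto3  # imported lazily so the config builder works without boto3

    client = boto3.client("bedrock", region_name=region)
    response = client.create_guardrail(**build_guardrail_config(name))
    return response["guardrailId"]
```

Keeping the configuration in a plain dictionary makes it easy to review or version-control the policy separately from the call that creates it.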

Implementing a defense-in-depth strategy is crucial for mitigating risks associated with deploying foundation models like DeepSeek-R1, addressing potential vulnerabilities and malicious use.
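One layer of such a strategy could be to screen both the user prompt and the model response with the `apply_guardrail` operation of the `bedrock-runtime` client. The following is a minimal sketch under that assumption: `guarded_invoke` and the `invoke_model` callable are hypothetical names introduced here, and the guardrail ID and version are expected to come from a guardrail that already exists.

```python
from typing import Callable, Tuple


def guarded_invoke(
    runtime_client,
    guardrail_id: str,
    guardrail_version: str,
    prompt: str,
    invoke_model: Callable[[str], str],
) -> str:
    """Check the prompt, call the model, then check the model's response.

    `runtime_client` is expected to behave like boto3.client("bedrock-runtime");
    `invoke_model` is a caller-supplied function that returns the model's text.
    """

    def check(text: str, source: str) -> Tuple[bool, str]:
        response = runtime_client.apply_guardrail(
            guardrailIdentifier=guardrail_id,
            guardrailVersion=guardrail_version,
            source=source,  # "INPUT" for prompts, "OUTPUT" for completions
            content=[{"text": {"text": text}}],
        )
        if response["action"] == "GUARDRAIL_INTERVENED":
            # On intervention, outputs carry the blocked message or masked text.
            outputs = response.get("outputs", [])
            replacement = outputs[0]["text"] if outputs else ""
            return True, replacement
        return False, text

    # Layer 1: screen the prompt before it reaches the model.
    intervened, message = check(prompt, "INPUT")
    if intervened:
        return message

    # Layer 2: screen the completion before it reaches the user.
    completion = invoke_model(prompt)
    _, safe_text = check(completion, "OUTPUT")
    return safe_text
```

Passing the client and the model call in as arguments keeps the wrapper independent of how the model is hosted, so the same check could sit in front of different deployment options.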

Organizations utilizing these models must prioritize data privacy, bias management, and robust monitoring, particularly in regulated sectors such as healthcare and finance.

Regularly reviewing and updating security controls is vital to adapt to emerging threats in the AI landscape, ensuring the responsible use of generative AI.

Amazon Bedrock provides comprehensive security features, including data encryption, fine-grained access controls, and compliance certifications, that help protect deployments of open source models such as DeepSeek-R1.